Computing technologies have revolutionized the world, from how we grow food to our social interactions. At the core of this revolution is computing hardware, the shrinking of which has allowed for powerful computation in the palm of your hand. Unfortunately, Moore’s law is coming to an end, and we can no longer count on traditional CMOS-based hardware to keep delivering more powerful computers. What alternatives to CMOS-based hardware are out there? The Computing Community Consortium (CCC) organized the Next Generation Computer Hardware scientific session at the 2020 American Association for the Advancement of Science (AAAS) Annual Meeting in February to discuss this topic and share some potential avenues for future research.

Mark Hill

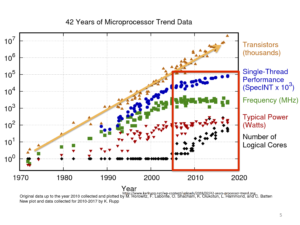

CCC Council Chair Mark D. Hill (University of Wisconsin) moderated the session, which included Todd Hylton (UC San Diego) and CCC Council Member Tom Conte (Georgia Tech) as speakers. Hill opened the session by explaining the current state of computing hardware. Computers are organized into layers, or a “stack,” with hardware (including microarchitecture, logic design, and transistors) at the “bottom” of the stack and software at the “top.” Over the past forty years, the number of transistors in a microprocessor has continued to grow exponentially (a straight line on a logarithmic plot). Over the same period, single-thread performance has grown much more slowly and typical power has leveled off. This divergence reflects the end of Moore’s law as a source of ever-faster computers, so continuing to improve computer performance will require novel hardware approaches.

42 Years of Microprocessor Trends

Tom Conte

Next, Tom Conte explored novel computing substrates in his presentation Into The Wild: Radically New Computing Methods for Science. First, Conte corrected a common misconception about Moore’s law. Many people believe that Moore’s law says computers get twice as fast every two years. In fact, Moore’s law is an observation about cost, made by Intel co-founder Gordon Moore: if $1 buys 1,000 transistors today, then in approximately two years $1 will buy 2,000 transistors. Transistor count used to track computer speed closely, but, as Mark Hill’s presentation showed, that relationship no longer holds. Digital accelerators have provided a stopgap solution, but Conte highlighted several alternative computing models that offer an opportunity for continued performance improvement.
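Because the doubling compounds, the cost side of Moore’s observation grows quickly. The following is a minimal sketch in Python. It uses the text’s illustrative figure of 1,000 transistors per dollar and assumes an exact two-year doubling period; the real cadence was only approximate and has since slowed. The function name and parameters are hypothetical.

```python
def transistors_per_dollar(years: float, today: float = 1_000,
                           doubling_period: float = 2.0) -> float:
    """Transistors one dollar buys after `years`, assuming the count per
    dollar doubles every `doubling_period` years (an idealized Moore's law)."""
    return today * 2 ** (years / doubling_period)


if __name__ == "__main__":
    for years in (0, 2, 4, 10, 20):
        print(years, round(transistors_per_dollar(years)))
```

Ten years is five doublings, so the same dollar buys 32,000 transistors. Note that the function says nothing about speed, which is exactly Conte’s point.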

One such model is cryogenic computing, in which the computing system is cooled to 4 kelvin (-269.15 °C/-452.47 °F) so that its superconducting circuits conduct electricity with essentially no resistance. Conte noted that cryogenic computing has been shown to operate at 1/100th the power of CMOS, including the cryocooling overhead. IARPA’s Cryogenic Computing Complexity (C3) program has been exploring this technology. The C3 webpage states, “While, in the past, significant technical obstacles prevented serious exploration of superconducting computing, recent innovations have created foundations for a major breakthrough. Studies indicate that superconducting supercomputers may be capable of 1 PFLOP/s for about 25 kW and 100 PFLOP/s for about 200 kW, including the cryogenic cooler. Proof at smaller scales is an essential first step before any attempt to build a supercomputer.”[1]
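Dividing the IARPA study estimates by their power budgets gives the implied energy efficiency. These are the projected figures quoted above, not measurements:

$$
\begin{aligned}
\frac{1\ \text{PFLOP/s}}{25\ \text{kW}} &= \frac{10^{15}\ \text{FLOP/s}}{2.5\times 10^{4}\ \text{W}} = 4\times 10^{10}\ \text{FLOP/s per W} = 40\ \text{GFLOP/s per W}\\
\frac{100\ \text{PFLOP/s}}{200\ \text{kW}} &= \frac{10^{17}\ \text{FLOP/s}}{2\times 10^{5}\ \text{W}} = 5\times 10^{11}\ \text{FLOP/s per W} = 500\ \text{GFLOP/s per W}
\end{aligned}
$$

On these estimates, the larger system would be about 12.5 times more efficient per watt (500/40 = 12.5), because the cryocooler overhead does not grow in proportion to compute.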

Conte also described two non-von Neumann architectures that have emerged as promising. The first, and most popular, is quantum computing, in which quantum entanglement and quantum superposition are used to perform computation with qubits. However, as Conte explained, qubits are fragile and vulnerable to errors, and quantum error correction is expensive. To make a logical qubit, which can be used to reliably perform computation, you must aggregate hundreds of noisy physical qubits. Currently, the best quantum computers have only several dozen qubits, so much work is still needed to make these systems reliable. In 2018 the CCC held a workshop on quantum computing, and its report states: “Preskill has coined the phrase Noisy Intermediate-Scale Quantum (NISQ) to refer to the class of machines we are building currently and for the foreseeable future, with 20-1000 qubits and insufficient resources to perform error correction. Increasingly, substantial research and development investments at a global scale seek to bring large NISQ and beyond quantum computers to fruition, and to develop novel quantum applications to run on them.”[2]

The second non-von Neumann architecture Conte described is analog computing, which uses annealing to solve problems. In metallurgy, annealing is a process of heating an alloy and then cooling it in a controlled way to obtain desired properties. Applied to computing, the idea is to search widely for good solutions early on (like a high temperature) and then narrow the search (like a low temperature) later.
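The same wide-then-narrow search can be written in software as classic simulated annealing. The Python sketch below is a generic textbook version, not the annealing hardware Conte described. The toy cost function (`bumpy_cost`), the neighbor move (`step`), and the cooling parameters (`t_start`, `cooling`, `steps_per_temp`) are hypothetical choices for illustration.

```python
import math
import random


def simulated_annealing(cost, neighbor, start, t_start=100.0, t_end=1e-3,
                        cooling=0.95, steps_per_temp=100, seed=0):
    """Minimize `cost` with simulated annealing; returns (best_state, best_cost)."""
    rng = random.Random(seed)
    current, current_cost = start, cost(start)
    best, best_cost = current, current_cost
    t = t_start
    while t > t_end:
        for _ in range(steps_per_temp):
            candidate = neighbor(current, rng)
            candidate_cost = cost(candidate)
            delta = candidate_cost - current_cost
            # Downhill moves are always taken; uphill moves are taken with
            # probability exp(-delta / t): often when t is high (wide search),
            # almost never when t is low (narrow search).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current, current_cost = candidate, candidate_cost
                if current_cost < best_cost:
                    best, best_cost = current, current_cost
        t *= cooling  # geometric "cooling" schedule
    return best, best_cost


# Hypothetical toy problem: integers 0..99, global minimum at x = 37,
# plus a penalty that creates local minima (e.g. x = 27 and x = 47).
def bumpy_cost(x):
    return (x - 37) ** 2 + 30 * (x % 10 != 7)


def step(x, rng):
    # Move one unit left or right, staying inside 0..99.
    return min(99, max(0, x + rng.choice((-1, 1))))


if __name__ == "__main__":
    print(simulated_annealing(bumpy_cost, step, start=0))
```

At a high starting temperature, uphill moves are accepted often, so the search roams widely. As `t` shrinks, the acceptance probability for uphill moves vanishes and the search settles into a minimum. A purely greedy downhill search that started near x = 27 or x = 47 would stay stuck there.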

Other computing models Conte presented include:

- resistive crossbar networks

- open system thermodynamics

- RNA/DNA

- coupled oscillators

Todd Hylton

Following Conte, Todd Hylton shared his presentation titled The Future of Computing: It’s All About Energy. Hylton and Conte were both organizers of the CCC’s 2019 Thermodynamic Computing workshop. In his presentation Hylton outlined his inspiration for thinking about computing thermodynamically and predicted what a thermodynamic computer (TDC) might be able to do in the future.

Computing currently uses about 5% of US electricity production, and semiconductor fabrication is alarmingly expensive. What if we could build cheaper, more efficient computers? That is the promise of thermodynamic computers.

From the Thermodynamic Computing workshop report: “We propose that progress in computing can continue under a united, physically grounded, computational paradigm centered on thermodynamics… thermodynamics might drive the self-organization and evolution of future computing systems, making them more capable, more robust, and less costly to build and program. As inspiration and motivation, we note that living systems evolve energy-efficient, universal, self-healing, and complex computational capabilities that dramatically transcend our current technologies. Animals, plants, bacteria, and proteins solve problems by spontaneously finding energy efficient configurations that enable them to thrive in complex, resource-constrained environments. For example, proteins fold naturally into a low-energy state in response to their environment. In fact, all matter evolves toward low energy configurations in accord with the Laws of Thermodynamics…Herein we propose a research agenda to extend these thermodynamic foundations into complex, non-equilibrium, self-organizing systems and apply them holistically to future computing systems that will harness nature’s innate computational capacity. We call this type of computing “Thermodynamic Computing” or TC.” (p. 1).

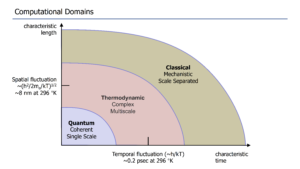

Hylton said that Thermodynamic Computing exists at a midpoint between the quantum and classical computing domains. As the workshop report puts it, “In the classical domain, fluctuations are small compared to the smallest devices in a computing system (e.g. transistors, gates, memory elements), thereby separating the scales of “computation” and “fluctuation” and enabling abstractions like device state and the mechanization of state transformation that underpin the current computing paradigm. The need to average over many physical degrees of freedom in order [to] construct fluctuation free state variables is one reason that classical computing systems cannot approach the thermodynamic limits of efficiency,” while in the quantum domain, “fluctuations in space and time are large compared to the computing system. While the classical domain avoids fluctuations by “averaging them away,” the quantum domain avoids them by “freezing them out” at very low temperatures (milli-Kelvin for some systems).” (pp. 8-9).
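The cost of “averaging them away” can be made concrete with a standard statistical result. This assumes, as a simplification not made in the report, that a state variable is the average of $N$ independent degrees of freedom, each fluctuating with standard deviation $\sigma$:

$$
\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad \sigma_{\bar{x}} = \frac{\sigma}{\sqrt{N}}
$$

Suppressing fluctuations by a factor of 100 therefore means averaging roughly $100^2 = 10{,}000$ degrees of freedom. This illustrates the overhead the report describes.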

Computational Domains: Classical, Thermodynamic, and Quantum

In contrast, in the thermodynamic domain “fluctuations in space and time are comparable to the scale of the computing system and/or the devices that comprise the computing system…The thermodynamic domain is inherently multiscale and fluctuations are unavoidable. Presumably, this is the domain that we need to understand if our goal is to build technologies that operate near the thermodynamic limits of efficiency and spontaneously self-organize, but it is also the domain that we carefully avoid in our current classical and quantum computing efforts.” (pp. 8-9). During his presentation, Hylton showed a number of self-organizing structures in nature, such as lightning, galaxies, and the slime mold, a single-celled organism that stretches itself in highly efficient ways to reach food.

During the Q&A, one attendee asked the panel about the potential of wafer-scale integration for improving computing performance. In wafer-scale integration, rather than cutting the silicon wafer into many individual chips, you use the entire wafer as a single, very large chip. Tom Conte responded that wafer-scale integration is such a great idea that it’s old: in the 1970s and 80s there were attempts at building wafer-scale processors, but those projects failed. Conte cited the work Cerebras has done in building wafer-scale systems that are being used for parallel computing.

A number of attendees asked how likely it is that particular computing substrates will improve performance over CMOS. Hylton said that although some show more promise than others, he hopes for greater investment in speculative ideas overall. He acknowledged that many of them won’t work out and others might take a generation to pay off, but argued that novel computing hardware could ultimately revolutionize the world in the same way CMOS-based digital computing has.

View all the slides and learn more about the “Next Generation Computing Hardware” session on the CCC @ AAAS webpage.

Related Links:

- Thermodynamic Computing, CCC workshop report: https://cra.org/ccc/wp-content/uploads/sites/2/2019/10/CCC-Thermodynamic-Computing-Reportv3.pdf

- Thermodynamic Neural Network by Todd Hylton https://arxiv.org/abs/1906.01678

- Chaotic dynamics in nanoscale NbO2 Mott memristors for analogue computing by Suhas Kumar, John Paul Strachan, & R. Stanley Williams https://www.nature.com/articles/nature23307

- Catalyzing Computing Podcast Episodes 3 & 4: What is Thermodynamic Computing? https://cra.org/ccc/podcast/#episode4

[1] https://www.iarpa.gov/index.php/research-programs/c3

[2] M. Martonosi and M. Roetteler, with contributions from numerous workshop attendees and other contributors as listed in Appendix A. Next Steps in Quantum Computing: Computer Science’s Role. Computing Community Consortium https://cra.org/ccc/wp-content/uploads/sites/2/2018/11/Next-Steps-in-Quantum-Computing.pdf